Do websites take the mick?

Back when I worked for Littlewoods, I was part of the team running BetDirect, an online gambling platform. We had extremely strict brand guidelines and equally stringent web policies.

The homepage had to be less than 2MB to download, and it needed to render in under 2,000ms (2 seconds).

Our customers enjoyed a very fast experience navigating the sportsbook, which handled constantly changing odds with ease. At the time, that speed also helped our SEO efforts to get us featured "above the fold" on Google, as they used to say. Essentially, we aimed to be the number one result on page one for gambling in the UK.

One clever approach we took was running the BetDirect login (username and password) in an iFrame served over SSL (now TLS), while the rest of the site wasn’t SSL-secured. The load balancers of the time fronted two production servers, and serving the entire site over SSL would have added a whopping 500ms to the load time. Every millisecond was accounted for and spent carefully to keep the site as fast and efficient as possible.

I remember when we got a pair of F5 load balancers. They were fantastic. You could upload your £500 wildcard SSL certificate (complete with the green address bar), and the load balancers would handle all the encryption and decryption for you, taking that work off the web servers.

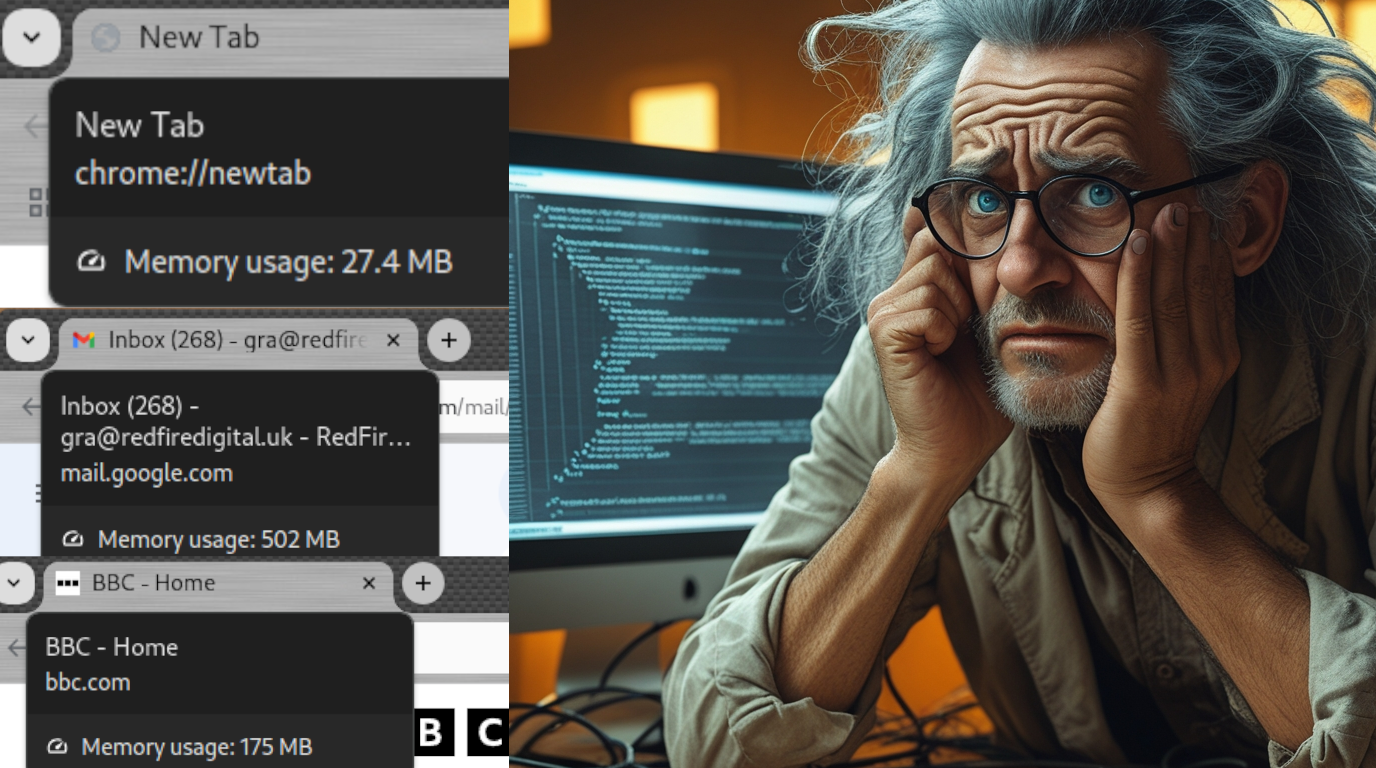

Fast forward to today, and these problems are largely a thing of the past. But because they’ve been solved, websites now take the mick. It’s common for even simple websites to exceed 100MB. I understand that platforms like Google Maps and YouTube require significant resources due to their media-heavy nature. But 500MB of RAM for Gmail? Really? It’s mostly just lines of text and a few images.

Is this the result of bad coding practices, or the consequence of JavaScript libraries and their versions compounding on top of one another, each bringing bloated “out-of-the-box” functionality with it?